We all know Google uses many factors to rank webpages, and search engine optimization (SEO) is the best way to rank well and become visible in search results. However, there is an important step before we start optimizing our website and creating content: we need to make sure search engines can crawl our pages. This is called “technical SEO”. It is fair to say that technical optimization is the foundation of any SEO effort, and without it all other SEO activities would be useless.

Each objective has more detail, which we will discuss later.

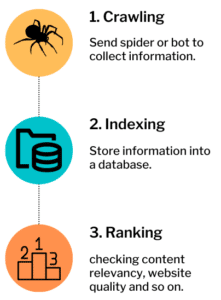

Generally speaking, every search engine goes through three processes to rank your webpage: crawling, indexing, and ranking. We will explain each step so that you have a better understanding of what search engines do in the background.

In the first step, crawling, search engines try to discover the information that is available online. They do this using programs called spiders or bots. The bots collect information such as URLs, keywords, content type, internal and external links, and so on.

In the second step, indexing, search engines store all the information collected by the bots in a database. To find out how many of your web pages are indexed by Google, for instance, just search for “site:YourDomain.com”. The results show the pages that Google has already indexed.

The last step, ranking, is checking the seven main criteria.

Now it is time to start your technical optimization. Before that, however, you need to check three main things.

Google Search Console is the best-known tool, and because Google has the largest share of the search market, most digital marketers are familiar with it. Bing Webmaster Tools is another option for anyone who wants to be ranked and recognized by the Bing search engine. Other options include Yandex for Russia, which is helpful for sites that want to work in that region’s online market, and Baidu for China, which makes your website crawlable there.

Furthermore, it helps to use tools to manage your SEO. For websites that use WordPress, Yoast is one of the best options, and it gives you insight into the SEO elements of your pages and content. The last tool you need to set up is Google Analytics. It shows how your traffic is performing and provides many reports to help experts.

As for technical features, we would like to start with the XML sitemap. Its main task is to help search engines crawl your site more efficiently and index it faster. On a website with many pages, it can be difficult for crawlers to find pages and their relationships, especially if you do not have a good internal link structure. For instance, if you have a page that no other page links to (called an “orphan page”), the sitemap can introduce it to search engine crawling bots.
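As a rough illustration of what such a file contains, the sketch below builds a minimal XML sitemap with Python's standard library. The `example.com` URLs and the `build_sitemap` helper are hypothetical names chosen for this example; a real sitemap would usually be generated by your CMS or an SEO plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) listing the given page URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages; the last one is an orphan page that no other page links to.
pages = [
    "https://example.com/",
    "https://example.com/blog/",
    "https://example.com/orphan-page/",
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Because the orphan page is listed explicitly, a crawler reading the sitemap can find it even though no internal link leads there.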

The next file is robots.txt, and it has several functions. It is the first thing that spiders check. In this file, you can specify which pages may be crawled and which may not. For example, you won’t want pages such as employee or paid-member pages crawled by search engines. The file has four kinds of entries: user-agent, allow, disallow, and sitemap. With user-agent, you determine which bot (such as Googlebot or Bingbot) the rules apply to. With allow and disallow, you specify which pages bots may crawl and which they may not. Lastly, you can list the address of your website’s sitemap there.
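To make the four kinds of entries concrete, here is a small sketch that parses a sample robots.txt with Python's built-in `urllib.robotparser` and asks whether a bot may fetch a page. The file contents, paths, and `example.com` domain are assumptions made up for this illustration, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt showing all four kinds of entries.
robots_txt = """\
User-agent: *
Disallow: /employees/
Disallow: /members/
Allow: /

Sitemap: https://example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Ask whether a given bot may crawl a given URL under these rules.
blog_allowed = parser.can_fetch("Googlebot", "https://example.com/blog/")
employees_allowed = parser.can_fetch("Googlebot", "https://example.com/employees/list")
sitemaps = parser.site_maps()

print(blog_allowed, employees_allowed, sitemaps)
```

Python's parser applies the first matching rule in file order, which is why the `Disallow` lines come before the general `Allow: /`. Individual search engines may resolve conflicting rules differently, so it is worth testing the file with each engine's own tools.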

Note: search engines like Google can crawl your website without these files, but if you have them and structure them correctly, crawling will be more precise and faster.

To improve user experience technically, you need to check your website’s responsiveness. It is vital to ensure the mobile version of your website works properly, because Google and other search engines penalize websites that are not mobile-friendly.

Then check your website’s uptime. It is important to have a reliable hosting service, and if you notice that your website goes down frequently, it is better to contact your hosting provider.

An HTML sitemap is another feature that can help you technically. The XML sitemap is basically for search engine crawlers, whereas the HTML sitemap is a page on your website that lays out the links of your website.

Another feature is social media buttons: make sure you have them so that visitors can share your website’s content on those platforms.

Next comes managing the URLs that you have already changed or will change. Make sure you manage these redirections, because you may lose backlinks, especially when you permanently redirect a page (a 301 redirect). It is also important to check all links to avoid broken ones (404 errors). Find the broken links and either delete them or redirect them to new, valid links.
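The "delete or redirect" decision can be sketched as a small planning step. The example below assumes you have already crawled your links and recorded their HTTP status codes; the `plan_link_fixes` helper, the paths, and the status data are all hypothetical and only illustrate the idea.

```python
def plan_link_fixes(link_status, replacements):
    """For each broken link (HTTP 404), decide whether to redirect or delete it.

    link_status:  dict mapping a link to the HTTP status code it returned.
    replacements: dict mapping a broken link to its new, valid address.
    """
    plan = {}
    for link, status in link_status.items():
        if status != 404:
            continue  # only broken links need attention
        if link in replacements:
            plan[link] = ("redirect", replacements[link])
        else:
            plan[link] = ("delete", None)
    return plan


# Hypothetical crawl results and known replacement URLs.
link_status = {
    "/pricing": 200,
    "/old-pricing": 404,
    "/summer-sale-2019": 404,
}
replacements = {"/old-pricing": "/pricing"}

fixes = plan_link_fixes(link_status, replacements)
print(fixes)
```

Here `/old-pricing` has a valid successor and is redirected, while `/summer-sale-2019` has none and is marked for deletion.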

An SSL certificate is another feature, and you need to ask your host for one or use a service like Cloudflare. In addition to making your address secure, such services offer another feature, a Content Delivery Network (CDN), which helps your website load faster, especially if you have an international market.

Last but not least is page speed. Google has mentioned that it is one of the most important ranking factors, and you need to take it seriously. Aim for a load time of around 3 seconds, although the target can differ from website to website. You need to check page speed, image optimization, video optimization, and caching; optimize the database; minify CSS, HTML, and JavaScript files; and, for some websites (such as CMS-based ones), use the latest PHP version.
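To show what minifying a file means in practice, here is a deliberately naive CSS minifier. It is only a sketch: the `minify_css` function is a hypothetical helper, and it ignores edge cases such as strings that contain braces or selectors where whitespace is significant, so a dedicated build tool or plugin is the better choice for a real site.

```python
import re


def minify_css(css):
    """Naively shrink CSS by removing comments and unneeded whitespace."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                        # collapse whitespace runs
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim around punctuation
    return css.strip()


original = """
/* main heading */
h1 {
    color: red;
    margin: 0 auto;
}
"""

minified = minify_css(original)
print(minified)  # h1{color:red;margin:0 auto;}
print(len(original), "->", len(minified), "characters")
```

The browser reads both versions the same way, but the smaller file takes less time to download.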

There are certainly more technical optimization factors that can help your website rank better. Just bear in mind that until you optimize your website technically, other SEO efforts such as on-page, off-page, or even creating more content may not improve your ranking as much as you expect.

